Disinformation – False information spread in order to deceive people.

Background:

Disinformation differs from misinformation in intent: misinformation is false information shared by mistake, whereas disinformation is deliberately false or misleading information spread to deceive.

Disinformation poses a significant challenge for enthusiasts seeking reliable, peer-shared information. This post examines a recent example to illustrate how disinformation can distort technical discussions and offers strategies to identify and counteract such tactics.

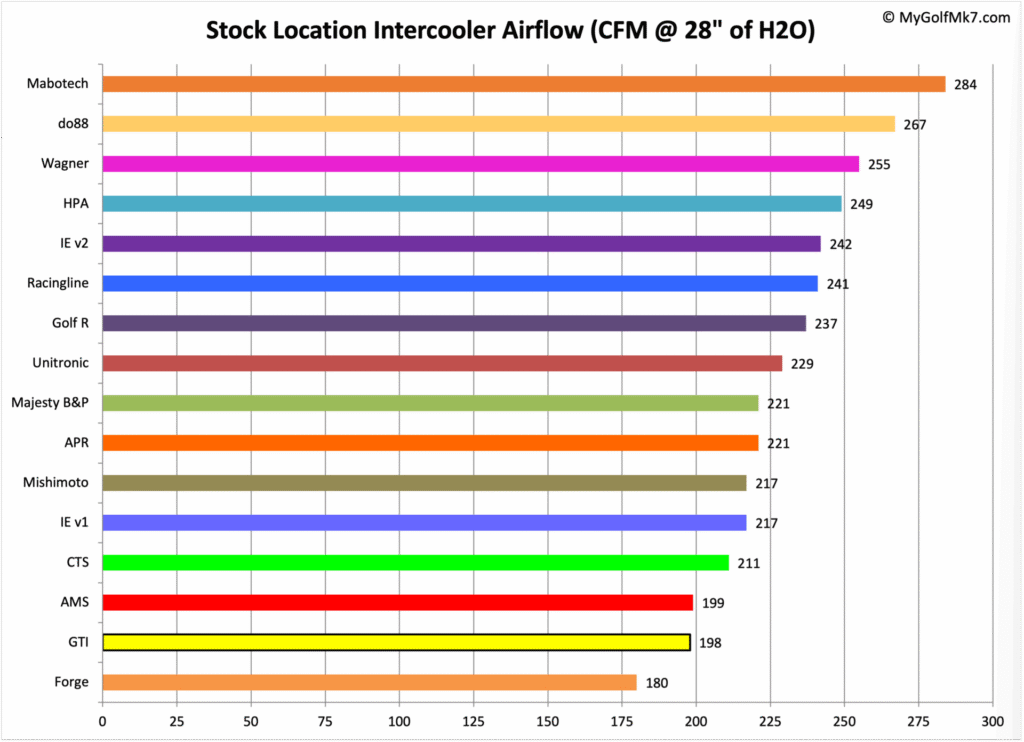

In a recent discussion about the Mabotech intercooler, a participant referenced a flow test demonstrating that the intercooler exhibits a relatively low pressure drop compared to other Mk7-compatible options.

A flow bench is ideally suited to measuring the flow rate through an intercooler.

An air flow bench is a device used for testing the internal aerodynamic qualities of an engine component and is related to the more familiar wind tunnel.

In this case, Romario interpreted the test results to conclude that the Mabotech intercooler was the best-flowing of the tested options, as shown below.

This post reviews Chris Vogl’s counterargument, which challenges Romario’s conclusion. I will examine whether Vogl’s claims are supported by evidence or rely on disinformation tactics.

Disinformation tactics:

Some signs of disinformation to keep in mind during this review:

- Using logical fallacies to distract from the core argument.

- Relying on opinions rather than verifiable evidence.

- Using sarcasm or insults to discredit valid information.

Reviewing the counterpoint:

The following review centers on a Red Herring logical fallacy.

Logical fallacies are flawed reasoning used to make an argument appear stronger than it really is. Here, the fallacy serves to steer the discussion away from the flow test.

A red herring fallacy is a form of logical fallacy or reasoning error that occurs when a misleading argument or question is presented to distract from the main issue or argument at hand.

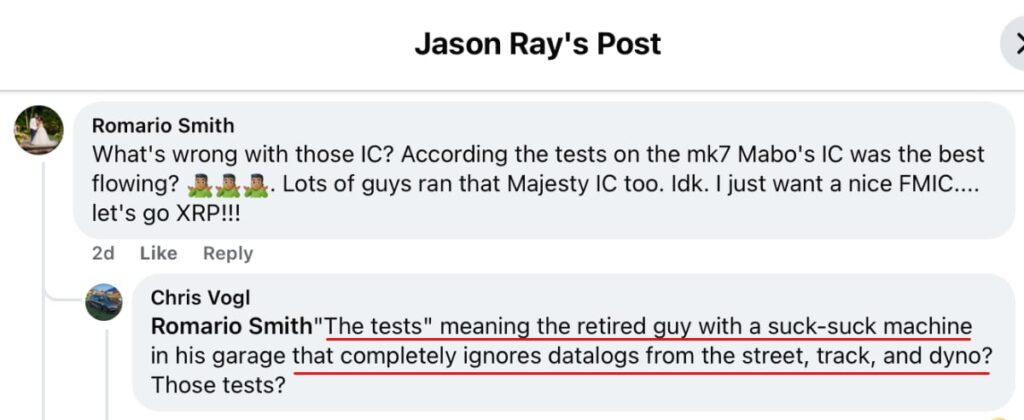

Chris Vogl begins by identifying the intercooler’s flow test as the subject of his rebuttal, placing “The tests” in quotation marks (shown below).

Irrelevant personal attack:

Immediately after referencing the flow test, Chris employs an Ad Hominem personal attack (a form of Red Herring fallacy) to divert attention from the flow test results.

Chris’s fallacy starts with a false and irrelevant claim about my employment status. Even if this statement were true, it would not affect the validity of the flow test result.

“The tests” meaning the retired guy…

Chris Vogl

Mischaracterizing the Testing Method:

Next, Chris describes the test, which used a PTS flow bench, as having been conducted with a “suck-suck machine.” This non-standard terminology suggests a misunderstanding of how the device operates, and it does not amount to a sound argument against the measurement results.

“The tests” meaning the retired guy with a suck-suck machine…

Chris Vogl

Ignoring Published Evidence:

Chris continues the Red Herring with a series of irrelevant accusations. These begin with a false claim that I “completely ignore datalogs” from the street. This claim is unrelated to the validity of the flow test.

This claim is contradicted by multiple street-test results published on this site over the past five years.

“The tests” meaning the retired guy with a suck-suck machine in his garage that completely ignores datalogs from the street…

Chris Vogl

Chris then falsely claims I ignore “track datalogs.” This is another claim that is irrelevant to the validity of the flow bench test.

The subsequent false and irrelevant claim is that I ignore dynamometer-generated data. Whether or not I use dynamometer-generated data has no bearing on the validity of the intercooler flow test.

“The tests” meaning the retired guy with a suck-suck machine in his garage that completely ignores datalogs from the street, track, and dyno?

Chris Vogl

This claim is easily disproven by numerous dynamometer test results published on this site.

At this point, Chris has failed to make any valid or relevant point about the flow test and concludes by saying, “Those tests?” without having made any counterargument about the tests he refers to.

Counterargument Summary:

Chris Vogl appears to be responding to a question about the performance of the Mabotech intercooler during a flow test. However, his response relies on disinformation tactics rather than engaging with the test data.

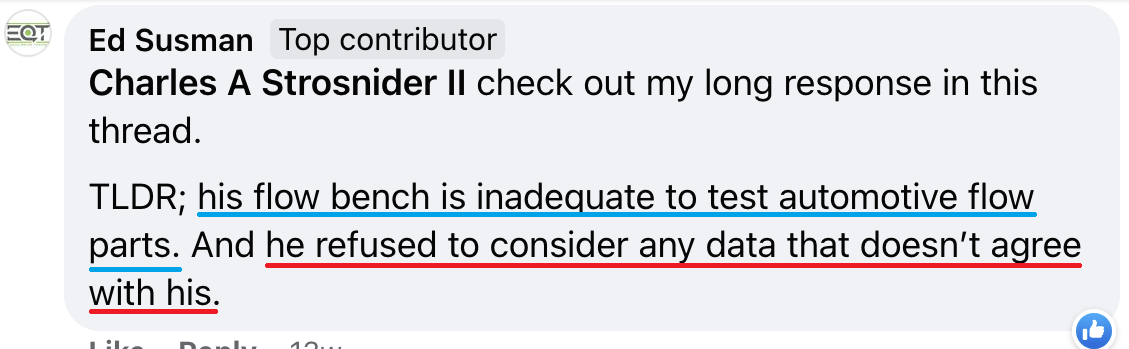

The same disinformation claims can be traced to statements previously made by Ed Susman, the owner of aftermarket parts supplier Equilibrium Tuning, as shown in the two examples below.

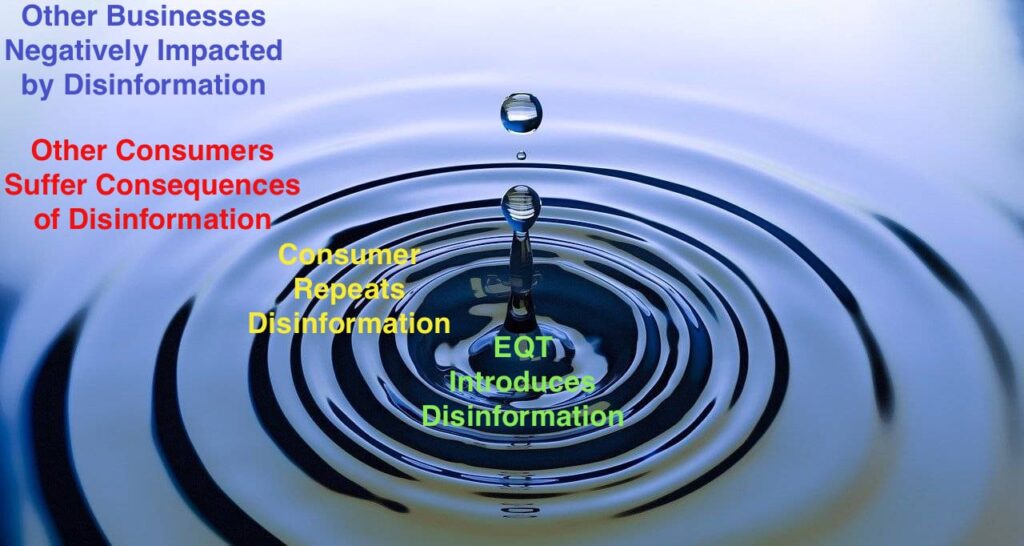

The evidence above illustrates that when bad actors join discussions and introduce disinformation, the negative impacts can be far-reaching.

In this example, Ed Susman of Equilibrium Tuning introduced disinformation to consumers interested in the Mk7 series of VW automobiles.

Chris Vogl repeated Ed’s false statements to Romario Smith and other consumers who were discussing the Mabotech stock location intercooler. The disinformation was meant to cast doubt on the validity of the flow test information, which could have affected their decisions regarding the Mabotech intercooler.

If these consumers had believed the disinformation, they might have viewed Mabotech’s product less favorably and chosen a lower-performing product instead, which would in turn have hurt Mabotech’s business.

Conclusion:

The disinformation illustrated in this post did not originate with Chris Vogl. Evidence indicates it was initially spread by Ed Susman, owner of Equilibrium Tuning, and subsequently echoed by Vogl.

Using disinformation to undermine competitors and mislead consumers is unethical and damaging to market integrity.

Is there a reason you don’t just install these intercoolers and do a real-world test? Why should anyone blindly accept the data you put out?

Is this a joke?

No, not at all. Why would it be?

At this moment, the top post on the blog is a Street Data comparison of the ARM FMIC and ARM Bicooler. There are also pages summarizing street data logged with stock-location ICs and front-mounted ICs.

You made a comment about ‘blindly’ accepting data when you hadn’t noticed that the top post on the site covers evaluating an intercooler in a ‘real-world test’, or that there are pages dedicated to summarizing two dozen different intercooler setups tested in the ‘real world’. There’s a bit of irony in asking about ‘blindly’ accepting data when you are oblivious to the data contained on this site.

Jeffrey,

Thank you for replying. Even though it comes across as a little pointed, as though you don’t want any discourse, I believe you misunderstood what I was asking. My point wasn’t about whether any real-world testing has ever been done; it was to ask why the primary focus isn’t on a more direct, hands-on installation and testing approach. Although your logged data is informative and tells a story, it does not always tell the entire story, including elements like heat soak from prolonged use, as well as installation difficulties and long-term performance differences that a methodical, firsthand test might expose. As a result, I’ve seen firsthand examples of your test being widely used to spread misinformation. I’m not blaming you for this, as I’m sure that’s not your intent.

Furthermore, this ties closely to my passing comment about “blindly accepting data,” which wasn’t intended to belittle the data there but much more to scrutinize the methodology and provoke more transparency. Having data doesn’t mean it shouldn’t be examined with a critical eye, and questioning methods is a part of that critical evaluation. My question was intended to promote debate, not to dismiss previous work.

I’ll happily review any direct installation tests you’ve done that touch on these concerns, if available. But I still believe that going beyond the logged data you present to validate the results has merit.

1. “why the primary focus isn’t on a more direct, hands-on installation and testing approach” – All of the parts that have been installed on my car for testing were installed by me. All of the tests that have been performed over the past seven years with my car have been done by me. How much more direct and hands-on can they get?

2. “it does not always tell the entire story” – True. Any test program has the potential to bankrupt a project. I don’t profit from conducting the tests and sharing the information with other owners. Limits on the tests are a consequence. To date nobody has offered to financially support more robust testing, so the tests are limited by how many resources I am willing to put into them.

3. “i’ve seen firsthand examples of your test being widely used to spread misinformation” – Me too. I can’t stop other people from doing that.

4. “much more to scrutinize the methodology and provoke more transparency.” – For nearly every test, I offer a Background section explaining what prompted the test, followed by a Test Process description that identifies the articles under test, the method of data collection, and the data of interest. Then I present the Test Results and, lastly, give my Conclusions about the test. I’m open to answering questions about the methods, but what do you find lacking that justifies saying there should be ‘more transparency’?

I have not counted the number of tests published on the site, but there is no shortage to choose from. If you identify one you’d like to ask about, I am happy to answer specific questions.